Abstract

Recent advances in camera-based bird's eye view (BEV) representation exhibit great potential for in-vehicle 3D perception. Despite the substantial progress achieved on standard benchmarks, the robustness of BEV algorithms, which is critical for safe operation, has not been thoroughly examined. To bridge this gap, we introduce RoboBEV, a comprehensive benchmark suite that encompasses eight distinct corruptions: Bright, Dark, Fog, Snow, Motion Blur, Color Quant, Camera Crash, and Frame Lost. Based on this benchmark, we undertake extensive evaluations across a wide range of BEV-based models to understand their resilience and reliability. Our findings indicate a strong correlation between absolute performance on in-distribution and out-of-distribution datasets. Nonetheless, relative performance varies considerably across approaches. Our experiments further demonstrate that pre-training and depth-free BEV transformation have the potential to enhance out-of-distribution robustness. Additionally, exploiting long and rich temporal information substantially improves robustness. Our findings provide valuable insights for designing future BEV models that can achieve both accuracy and robustness in real-world deployments.

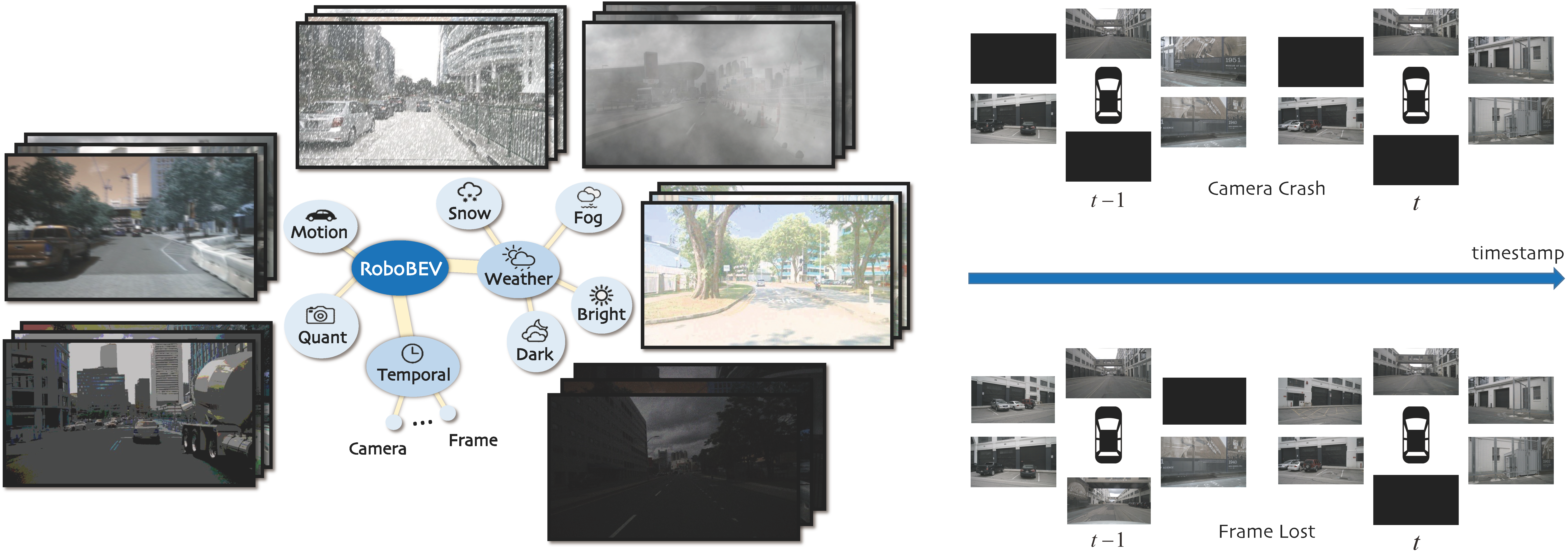

Taxonomy

We simulate eight corruption types from three categories:

1) Severe weather and lighting conditions (Bright, Dark, Fog, Snow);

2) Image distortions caused by motion or quantization (Motion Blur, Color Quant);

3) Camera failures (Camera Crash, Frame Lost).

Each corruption is further divided into three severity levels: easy, moderate, and hard.
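The two camera-failure corruptions can be illustrated with a minimal NumPy sketch. It assumes a multi-camera clip stored as a uint8 array of shape (frames, cameras, H, W, 3) and models a failed image as all black. The per-severity drop rates in `SEVERITY_DROP_RATE` and the function names are hypothetical placeholders, not the settings or code used to build nuScenes-C.

```python
import numpy as np

# Hypothetical fraction of cameras (Camera Crash) or images (Frame Lost)
# dropped per severity level; illustrative values, NOT the nuScenes-C settings.
SEVERITY_DROP_RATE = {"easy": 0.2, "moderate": 0.4, "hard": 0.6}


def camera_crash(clip: np.ndarray, severity: str, rng: np.random.Generator) -> np.ndarray:
    """Black out the same randomly chosen cameras in every frame.

    clip: uint8 array of shape (frames, cameras, H, W, 3).
    """
    out = clip.copy()
    num_cams = clip.shape[1]
    num_drop = int(round(SEVERITY_DROP_RATE[severity] * num_cams))
    dropped = rng.choice(num_cams, size=num_drop, replace=False)
    # A crashed camera delivers an all-black image for the whole clip.
    out[:, dropped] = 0
    return out


def frame_lost(clip: np.ndarray, severity: str, rng: np.random.Generator) -> np.ndarray:
    """Independently black out individual (frame, camera) images at random."""
    out = clip.copy()
    # Boolean mask over the first two axes selects whole images.
    lost = rng.random(clip.shape[:2]) < SEVERITY_DROP_RATE[severity]
    out[lost] = 0
    return out
```

For example, with a six-camera rig (as in nuScenes), `camera_crash(clip, "hard", np.random.default_rng(0))` blacks out the same four cameras in every frame, whereas `frame_lost` drops images independently, so a camera may be missing in one frame and present in the next.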

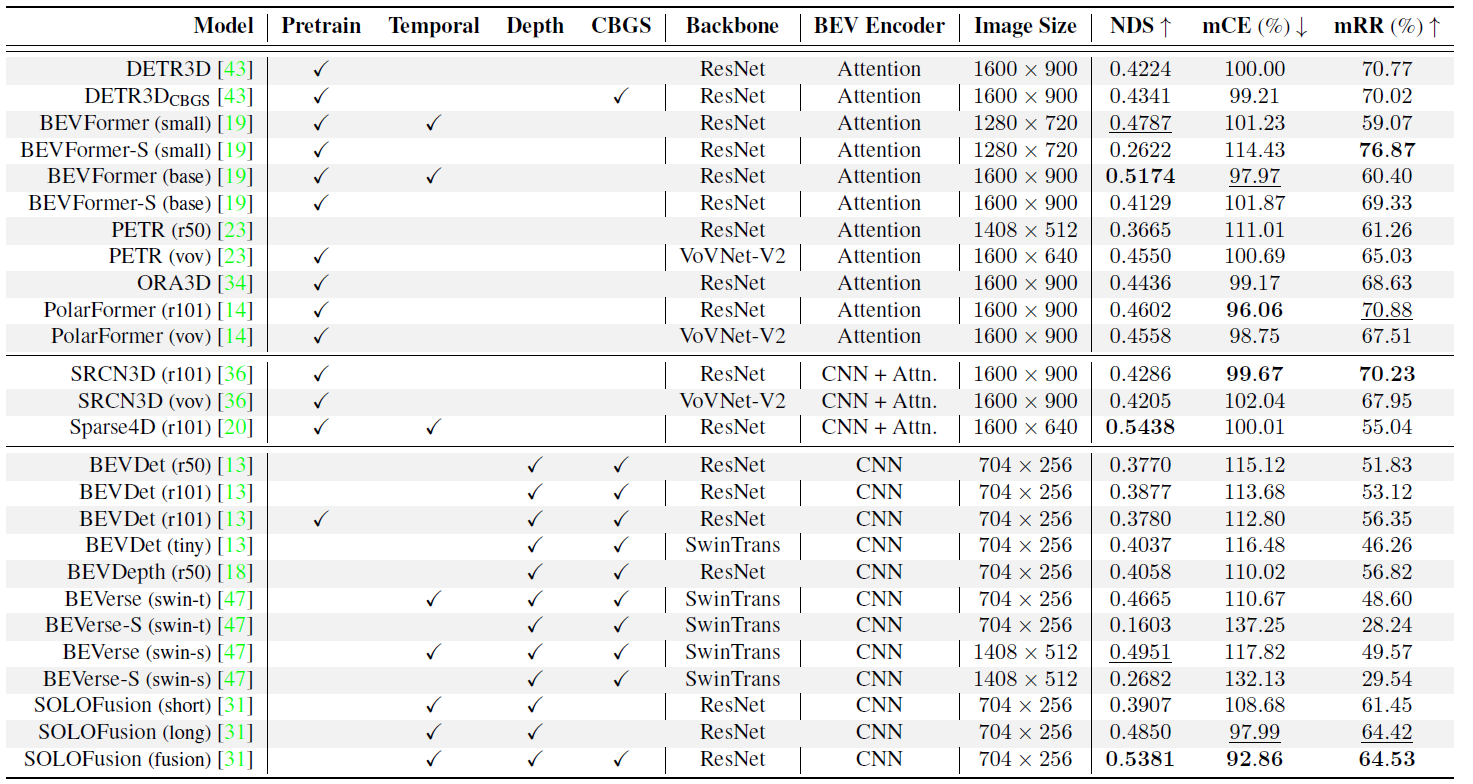

Benchmark

We benchmark 26 camera-based BEV models on the nuScenes-C dataset across 8 corruptions at 3 severity levels. To further investigate what drives model robustness, we break BEV detectors down into three components:

(1) Training strategy (e.g., FCOS3D pre-training and CBGS);

(2) Model architecture (e.g., backbone);

(3) Approach pipeline (e.g., temporal cue learning and depth estimation).
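To make the distinction between absolute and relative performance concrete, here is a hedged sketch of how per-model scores over the 8 corruptions × 3 severities grid could be summarized. It assumes a single higher-is-better metric per model. The "relative" score defined here (mean corrupted score divided by clean score) and the helper names are illustrative choices, not necessarily the metrics RoboBEV reports.

```python
from math import sqrt
from statistics import fmean
from typing import Mapping, Sequence, Tuple

CORRUPTIONS = ("Bright", "Dark", "Fog", "Snow",
               "Motion Blur", "Color Quant", "Camera Crash", "Frame Lost")
SEVERITIES = ("easy", "moderate", "hard")

# Hypothetical layout: corrupted[(corruption, severity)] -> metric value.
ScoreGrid = Mapping[Tuple[str, str], float]


def absolute_score(corrupted: ScoreGrid) -> float:
    """Average a model's score over all corruptions and severities."""
    return fmean(corrupted[(c, s)] for c in CORRUPTIONS for s in SEVERITIES)


def relative_score(clean: float, corrupted: ScoreGrid) -> float:
    """Fraction of clean performance retained under corruption (illustrative)."""
    return absolute_score(corrupted) / clean


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation, e.g. between clean and absolute corrupted scores."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant inputs")
    return cov / (sx * sy)
```

Under this sketch, a high `pearson` between models' clean scores and their `absolute_score` values, together with a wide spread of `relative_score` values, would correspond to the pattern described in the abstract.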

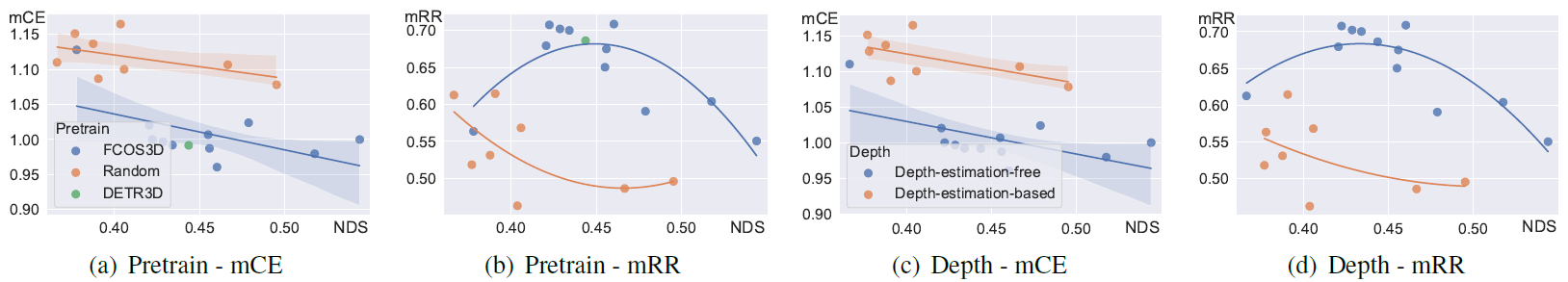

We find that pre-training and depth-free BEV transformation have the potential to enhance out-of-distribution robustness.

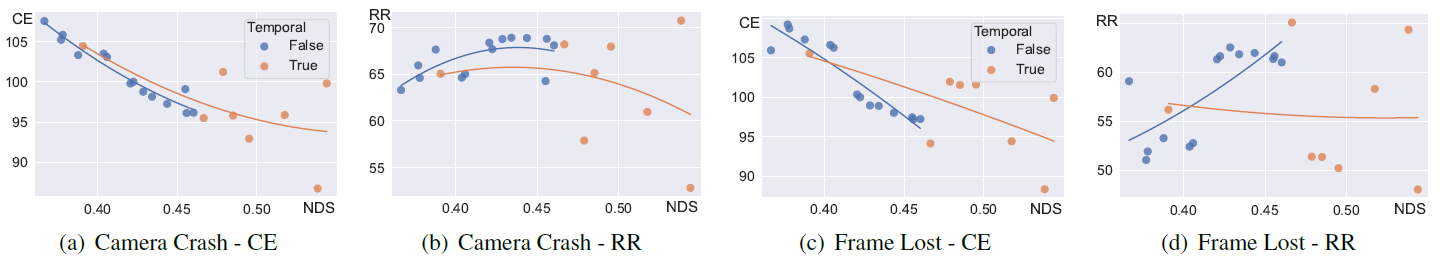

We further find that temporal fusion tends to yield better absolute performance under corruptions, and that fusing longer temporal information substantially improves robustness to Camera Crash and Frame Lost.

Dataset

The nuScenes-C dataset can be downloaded from OpenDataLab.

Future Works

We plan to incorporate unsupervised domain adaptation (UDA) into RoboBEV. Stay tuned!

BibTeX

@article{xie2023robobev,
  title = {RoboBEV: Towards Robust Bird's Eye View Perception under Corruptions},
  author = {Xie, Shaoyuan and Kong, Lingdong and Zhang, Wenwei and Ren, Jiawei and Pan, Liang and Chen, Kai and Liu, Ziwei},
  journal = {arXiv preprint arXiv:2304.06719},
  year = {2023}
}